In this context, managers and decision makers need to think strategically about where AI may (or may not) make a difference to their company.

Internal ‘ownership’ and skills

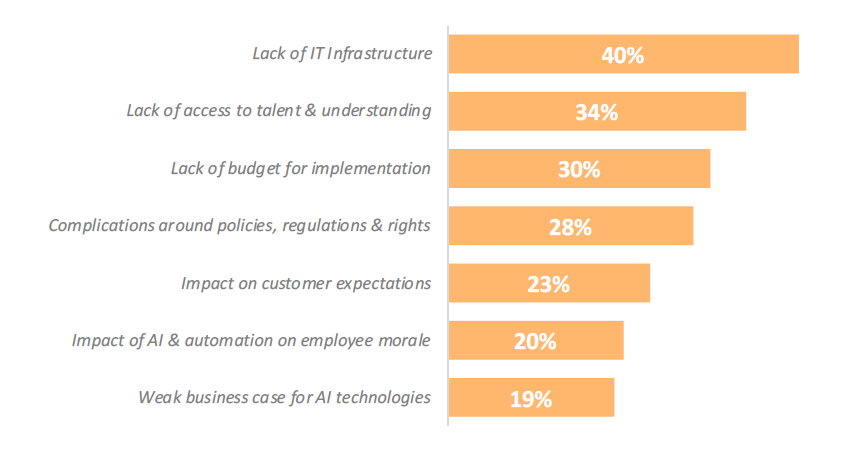

An important issue in many enterprises is who ‘owns’ AI. The natural answer would be the CIO. However, in recent years much software spending has moved outside the CIO’s realm, so there may be too many people in an organisation who want a say in how, when and where AI will be deployed. The risk is that no one in the enterprise ‘owns’ AI, which could slow down adoption. Another key challenge is finding talent, i.e. people with the requisite technical ability to train AI systems, for example by using customer support data to automate answers or marketing data to define and refine campaigns. In a recent PwC Digital IQ survey, only 20% of executives said their organizations had the skills necessary to succeed with AI. This could be another bottleneck slowing down enterprise adoption.

Fear of robots and employee ‘buy-in’

Will employees be enthusiastic about training AI and leveraging it to its fullest potential? An important task for enterprises will be to educate their employees about their plans and use cases for AI. According to the Teradata survey, many enterprises do not perceive AI to be a major threat to employees, with only 21% projecting that AI will replace humans for most enterprise tasks in their organization by 2030. It will therefore be important to send the message that most AI-enabled productivity improvements in the next decade will come from automating monotonous tasks, which in turn frees workers to focus on critical thinking and higher-level tasks.

Implementing and training AI is time-consuming and expensive

AI, like any other new technology, requires upfront investment in staff, training, advice and other resources. Hiring external consultants and training staff are only the first steps, usually followed by developing and implementing strategies on how, when and where to deploy AI in the enterprise. The actual implementation of AI/Deep Learning then requires another set of skills, mostly in the form of data scientists who ‘train’ AI systems. This training is time- and resource-consuming because it requires creating and maintaining massive training datasets to ‘feed’ into the AI system. Specific AI training and datasets cannot always be transferred to other similar tasks, which often means near-duplicating that training within the same department. The full scale of the resources an AI deployment consumes will not be known at the outset, which could delay implementation and adoption.

The ‘black box’ issue

Another, more conceptual, issue with AI systems is the lack of ‘visibility’ and ‘explainability’. By that we mean the difficulty of knowing exactly how and why the system makes certain decisions. This will be important in critical areas such as accountability when the system makes errors with serious and/or far-reaching consequences. The issue is probably most pressing in AI use cases that are in the public limelight and/or under the scrutiny of regulators, such as autonomous driving, healthcare or AI systems deployed in public service. Yet the larger and more complex an AI model is, the harder it will be to explain in hindsight why a certain decision was reached. Furthermore, as the application of AI expands, regulatory requirements could increase, further driving the need for more explainable AI models.